At the Speed of Conversation

When we set out more than two years ago to build a game that uses your voice as its input, one thing was clear: it needed to work differently from how voice input had worked in the past, and it needed to feel natural. That meant no list of magic commands, no waiting for responses, and no complex tutorialization.

At the time, advances in machine learning (text-to-speech, reasoning, and speech-to-text) made us believe this would finally be possible soon. The past two-plus years have been very challenging and required a lot of educated guesses about where the technology would be by the time we were ready to launch.

For one, when we started working on this, I calculated that running it would cost us $23 per hour, per user. That laughable number was many orders of magnitude away from a cost that could support a game. But we were confident that things were moving much faster than Moore’s law, and we were proven correct. Fast forward to today: we are able to ship a paid title with only a fraction of the cost going to this infrastructure.

Additionally, when we started, speech-to-text wasn’t accurate enough, LLMs weren’t smart enough, and text-to-speech quality wasn’t nearly high enough. Fortunately, we were again confident there would be huge improvements, and there have been. All three have improved by leaps and bounds, making what we were building possible.

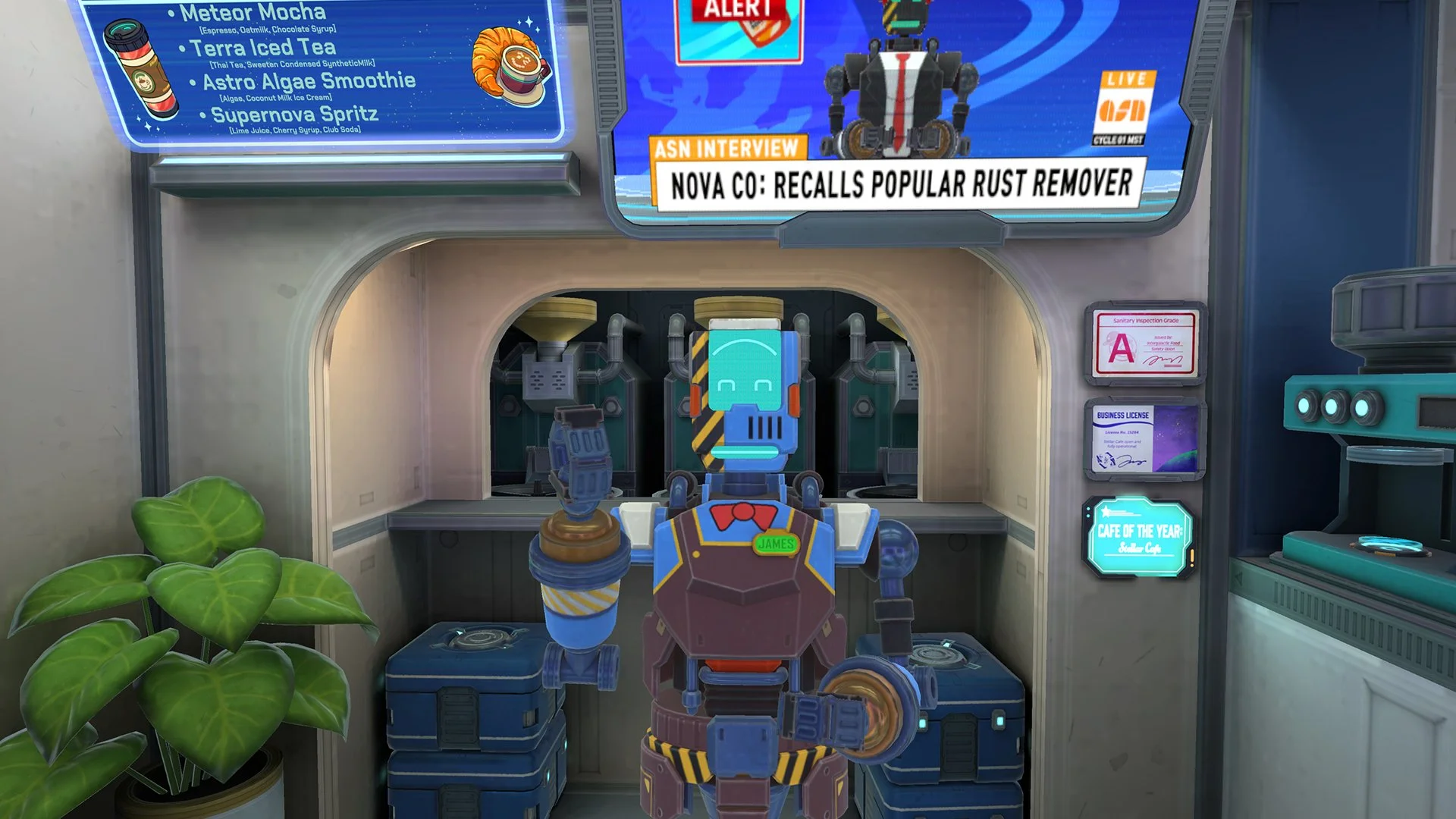

Talking to James in Stellar Cafe

Gotta Respond Fast

A huge technical challenge staring at us from the start was speed. No matter how good everything else is, if it feels like you are giving commands to a machine, we will have failed. We needed to crack conversational response times at scale. This is something most people have never experienced, and certainly not within a game that is also an interactive world.

We determined that for a conversation to actually feel like a conversation, the tipping point was around 1.8 seconds (give or take 0.2 s), measured from the user’s last utterance to the beginning of the response speech. Faster is better, but this was the point at which people bought in. That said, we also discovered that expectations vary widely with the context of the conversation. For example, a 1.8-second response to “What’s your name?” might feel a bit too long, while a 2.5-second response to “What is the meaning of life?” might feel fine. We also learned that it depends on things like the length of the conversation and its level of excitement.
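To make the 1.8-second target concrete, here is a minimal sketch of how that metric could be computed from two timestamps: the end of the user’s last utterance and the start of the response audio. The function names, the `Verdict` labels, and the choice to treat 1.8 s ± 0.2 s as a three-way band are illustrative assumptions, not Stellar Cafe’s actual instrumentation.

```python
from enum import Enum

# Tipping point from the post: about 1.8 s, give or take 0.2 s.
TARGET_S = 1.8
TOLERANCE_S = 0.2


class Verdict(Enum):
    # Illustrative labels for where a measured latency falls.
    CONVERSATIONAL = "conversational"
    BORDERLINE = "borderline"
    TOO_SLOW = "too slow"


def response_latency(last_utterance_end_s: float, response_audio_start_s: float) -> float:
    """Seconds from the end of the user's last utterance to the first response audio."""
    if response_audio_start_s < last_utterance_end_s:
        raise ValueError("response audio cannot start before the user stops speaking")
    return response_audio_start_s - last_utterance_end_s


def classify(latency_s: float, target_s: float = TARGET_S) -> Verdict:
    """Place a latency relative to the band target_s +/- TOLERANCE_S.

    Callers can pass a looser target_s for weightier questions, since the
    acceptable delay depends on the conversational context.
    """
    if latency_s <= target_s - TOLERANCE_S:
        return Verdict.CONVERSATIONAL
    if latency_s <= target_s + TOLERANCE_S:
        return Verdict.BORDERLINE
    return Verdict.TOO_SLOW
```

For instance, `classify(response_latency(10.0, 11.5))` returns `Verdict.CONVERSATIONAL`, while a 2.5-second reply is `Verdict.TOO_SLOW` at the default target but `Verdict.CONVERSATIONAL` if the caller passes a hypothetical `target_s=3.0` for a “meaning of life” style question.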

Most voice-based systems are nowhere near this fast (3-4 seconds is more common), because a lot of pieces have to work together for any of this to work, and each one adds delay.

Bring All The Parts Together…Quickly

First off is internet latency. Local models just aren’t powerful or fast enough to deliver these results yet, so we have no choice but to do this work on a server. That round trip carries an unavoidable time cost, so it was important for us to use low-latency voice transmission. We are using LiveKit for this, and we also use it to help build our agents.
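Because the network round trip is paid on every turn, it can help to treat the roughly 1.8-second target as a budget that every stage spends from. The sketch below is a minimal illustration of that bookkeeping; `StageTiming`, the stage names, and any numbers fed into it are hypothetical placeholders, not Stellar Cafe measurements.

```python
from dataclasses import dataclass

TARGET_MS = 1800  # conversational tipping point from the post (~1.8 s)


@dataclass
class StageTiming:
    name: str  # hypothetical stage label, e.g. "network" or "stt"
    ms: float  # time this stage took, in milliseconds


def remaining_budget(stages: list[StageTiming], target_ms: float = TARGET_MS) -> float:
    """Milliseconds left in the response budget after the given stages.

    A negative result means the pipeline is already over budget.
    """
    return target_ms - sum(stage.ms for stage in stages)


def report(stages: list[StageTiming], target_ms: float = TARGET_MS) -> str:
    """Human-readable breakdown of where the budget went."""
    lines = [f"{s.name:<12}{s.ms:>8.0f} ms" for s in stages]
    left = remaining_budget(stages, target_ms)
    status = "within budget" if left >= 0 else "OVER budget"
    lines.append(f"{'remaining':<12}{left:>8.0f} ms ({status})")
    return "\n".join(lines)
```

With made-up timings, `remaining_budget([StageTiming("network", 200), StageTiming("stt", 300)])` returns `1300`, the milliseconds left for the LLM and text-to-speech before the conversation stops feeling like one.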

When the voice arrives, we run it through a speech-to-text system. While these systems have become impressively fast, one challenge stands out: when is the user done speaking? A lot of systems use push-to-talk to simplify this and reduce cost, but that does not feel like a conversation in any way, so it was a non-starter. Instead, we take an early-guess approach. When we receive a transcript with reasonable confidence, we move on to the next step right away, even though there is a chance the user isn’t done speaking. If the user isn’t done speaking, we cancel everything and start again. Stellar Cafe uses Deepgram for this low-latency, high-quality speech-to-text.
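The early-guess approach can be sketched with `asyncio` task cancellation. This is a minimal illustration, not our production code: `EarlyGuessTurnTaker`, the 0.8 confidence threshold, and `fake_pipeline` (a stand-in for the LLM and text-to-speech steps) are hypothetical, and no Deepgram or LiveKit APIs are used.

```python
import asyncio
from typing import Awaitable, Callable, Optional

# Hypothetical value; the post only says "reasonable enough confidence".
CONFIDENCE_THRESHOLD = 0.8

ResponseFn = Callable[[str], Awaitable[str]]


class EarlyGuessTurnTaker:
    """Start a reply on a confident transcript; cancel it if the user keeps talking."""

    def __init__(self, respond: ResponseFn, threshold: float = CONFIDENCE_THRESHOLD) -> None:
        self._respond = respond
        self._threshold = threshold
        self._task: Optional[asyncio.Task] = None
        self.cancelled = 0  # number of speculative replies thrown away

    def on_transcript(self, text: str, confidence: float) -> None:
        """Handle each transcript update from the speech-to-text service."""
        # New speech means any reply already in flight is based on stale input.
        self._cancel_in_flight()
        if confidence >= self._threshold:
            # Early guess: assume the user is done and start the reply now.
            self._task = asyncio.create_task(self._respond(text))

    def _cancel_in_flight(self) -> None:
        if self._task is not None and not self._task.done():
            self._task.cancel()
            self.cancelled += 1
        self._task = None

    async def result(self) -> Optional[str]:
        """Wait for the current reply; None if there is none or it was cancelled."""
        task = self._task
        if task is None:
            return None
        await asyncio.wait({task})  # returns once the task finishes or is cancelled
        return None if task.cancelled() else task.result()


async def fake_pipeline(text: str) -> str:
    # Stand-in for the LLM and text-to-speech work.
    await asyncio.sleep(0.05)
    return f"reply to: {text}"


async def demo() -> tuple[Optional[str], int]:
    taker = EarlyGuessTurnTaker(fake_pipeline)
    taker.on_transcript("what is your", 0.9)        # confident: reply starts
    await asyncio.sleep(0.01)                      # user is still talking...
    taker.on_transcript("what is your name", 0.95)  # ...so cancel and restart
    return await taker.result(), taker.cancelled


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Any new transcript cancels the reply in flight and only a confident one starts a new reply, mirroring “cancel everything and start again.” Waiting with `asyncio.wait` instead of catching `CancelledError` keeps cancellation of the caller itself working normally.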

Now it’s time to run the LLM. We need an LLM that is fast, inexpensive, and smart enough to make this work; two out of three doesn’t cut it. For Stellar Cafe, Gemini 2.5 Flash was a great fit. We also co-locate as many services as possible to reduce internal latency. To make the LLM start faster, we constantly rebuild prompts with the latest context and information (our world is very dynamic) so that everything is ready at a moment’s notice. For example, we can’t wait until the LLM needs to run to ask the game for details about the scene. We’ve spent a lot of time refining these dynamic prompts so the LLM has all the context and goals it needs while keeping them as lightweight as possible. We then send this prompt, the chat history, and conversational memory to the LLM. We don’t wait for the full response; as soon as we have just enough validated text to speak, we move on to text-to-speech. Once again, all of this can be interrupted at any point if the user keeps speaking.
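Here is a minimal sketch of the “rebuild the prompt before you need it” idea, assuming the game pushes scene updates to the agent as they happen. `SceneState`, `PromptCache`, and the request dictionary are hypothetical names for illustration; the dictionary is not the Gemini API’s request format.

```python
from dataclasses import dataclass, field


@dataclass
class SceneState:
    # Hypothetical world-state fields; a real game tracks far more.
    location: str
    nearby_objects: list[str] = field(default_factory=list)
    npc_goal: str = ""


class PromptCache:
    """Keeps a ready-to-send prompt so nothing is fetched when the LLM must run."""

    def __init__(self, persona: str, scene: SceneState) -> None:
        self._persona = persona
        self._prompt = ""
        self.rebuilds = 0
        self.on_scene_changed(scene)

    def on_scene_changed(self, scene: SceneState) -> None:
        """Rebuild eagerly on every world update, off the reply's critical path."""
        objects = ", ".join(scene.nearby_objects) or "nothing notable"
        goal = scene.npc_goal or "chat with the player"
        self._prompt = (
            f"{self._persona}\n"
            f"Location: {scene.location}\n"
            f"Nearby: {objects}\n"
            f"Current goal: {goal}"
        )
        self.rebuilds += 1

    def build_request(self, history: list[str], memory: list[str]) -> dict:
        """Assemble pre-built pieces only; no game queries happen here."""
        return {"system": self._prompt, "history": list(history), "memory": list(memory)}
```

The design choice is to pay the prompt-building cost on every world change, which is off the critical path, so that `build_request` at reply time is just a cheap lookup plus the latest chat history and memory.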

Text-to-speech is one of the most challenging pieces from a latency standpoint. To help, we split the dialogue into two chunks: an initial chunk (chosen by criteria for how much text is enough and where a good ending point is) and then everything else. This lets us start generating the first bits of audio even before the LLM is done, and it buys us more time to generate the longer remainder while the NPC is already talking. This is an evolving area, and we are looking forward to new advancements in it. Stellar Cafe uses Inworld TTS for high-quality, low-latency, cost-effective voice generation.
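A minimal sketch of the two-chunk split, assuming the LLM response arrives as a stream of text deltas. The 20-character minimum and the set of break characters are hypothetical stand-ins for the unpublished “how much is enough” and “good ending point” criteria.

```python
from typing import Iterable, Iterator, Optional

# Hypothetical criteria: the post mentions "how much is enough" and
# "a good ending point" without giving specifics.
MIN_FIRST_CHUNK_CHARS = 20
BREAK_CHARS = ".!?,;:"


def _find_break(text: str, min_chars: int) -> Optional[int]:
    """Cut index for the first break that yields a chunk of at least min_chars.

    The break character must be followed by whitespace, so "3." is not cut
    before the "5" of "3.5" arrives in a later delta.
    """
    for i in range(max(min_chars - 1, 0), len(text) - 1):
        if text[i] in BREAK_CHARS and text[i + 1].isspace():
            return i + 1
    return None


def split_for_tts(deltas: Iterable[str], min_chars: int = MIN_FIRST_CHUNK_CHARS) -> Iterator[str]:
    """Yield an early first chunk, then everything else as one second chunk."""
    buffer = ""
    first_sent = False
    for delta in deltas:
        buffer += delta
        if not first_sent:
            cut = _find_break(buffer, min_chars)
            if cut is not None:
                # Hand this to text-to-speech now, while the LLM keeps streaming.
                yield buffer[:cut].strip()
                buffer = buffer[cut:]
                first_sent = True
    rest = buffer.strip()
    if rest:
        yield rest
```

Because `split_for_tts` is a generator, the first chunk is yielded as soon as it qualifies, so it can go to text-to-speech while later deltas are still streaming in. Short replies that never hit a qualifying break simply come out as a single chunk.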

Then we pipe the response audio back to the user, and they hear the reply. But audio is just one piece; emotions, animations, actions, gestures, and goals are also part of this package. One way to improve perceived latency is to start playing animation slightly before the first audio generation is complete. This shaves a few hundred more milliseconds off the perceived response, and it also feels more natural, since humans usually move slightly before speaking.
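A small sketch of starting the animation ahead of the audio, assuming the system can predict when the first audio chunk will be ready. The 200 ms lead, `ResponseCue`, and the event strings are hypothetical; the post only says the animation starts slightly before the audio.

```python
from dataclasses import dataclass

# Hypothetical lead time; the post says only that animation starts slightly early.
ANIMATION_LEAD_S = 0.2


@dataclass
class ResponseCue:
    animation: str        # gesture or emotion chosen alongside the reply
    audio_start_s: float  # predicted time the first audio will start playing


def animation_start_time(cue: ResponseCue, now_s: float, lead_s: float = ANIMATION_LEAD_S) -> float:
    """Start lead_s before the predicted audio, but never earlier than now."""
    return max(now_s, cue.audio_start_s - lead_s)


def timeline(cue: ResponseCue, now_s: float, lead_s: float = ANIMATION_LEAD_S) -> list[tuple[float, str]]:
    """Return (time, event) pairs in playback order."""
    anim_at = animation_start_time(cue, now_s, lead_s)
    return sorted([(anim_at, f"animation:{cue.animation}"), (cue.audio_start_s, "audio")])
```

Clamping to `now_s` matters when the audio is predicted to arrive sooner than the lead time: the animation then starts immediately instead of being scheduled in the past.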

Combine this with other fast systems, like looking at targets or nodding when a spoken response isn’t needed, and it all adds to the feeling of conversational speed. One more super important part is smooth interruptions (but that is a topic for another day).

Building the system that makes Stellar Cafe possible was a huge undertaking, and this post just scratches the surface. We can’t wait for people to try this first-of-its-kind game very soon!

You can wishlist Stellar Cafe today on the Meta Quest store - https://www.meta.com/experiences/stellar-cafe/23951924494476537/